Having implemented DNSSEC for a couple of years now, while playing with many different DNS options such as TTL values, I came across an error message from DNSViz pointing to possible problems when the TTL of a signed resource record is longer than the lifetime of the DNSSEC signature itself. Since I was not fully aware of this (and since I had not run into a real error over the past years), I wanted to test it more precisely.

For testing purposes I have a few DNS names with really long TTLs such as

ttl-52w.weberdns.de or

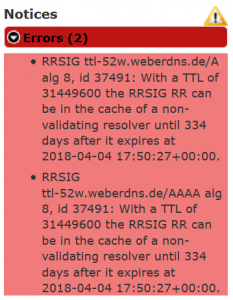

ttl-max.weberdns.de. Analyzing those names with DNSViz, the following error message is shown: “RRSIG ttl-52w.weberdns.de/A alg 8, id 37491: With a TTL of 31449600 the RRSIG RR can be in the cache of a non-validating resolver until 334 days after it expires at 2018-04-04 17:50:27+00:00.” Remember that each DNS resource record has a TTL value. Common periods are 1 d = 86400 s, 8 h, 6 h, 1 h, or even less. Those TTLs do also apply for the RRSIG resource records. However, those RRSIGs have two more (absolute) timestamps: signature expiration and signature inception – RFC 4043, section 3.1.5. By default, BIND uses the following timing: “The default base interval is 30 days giving a re-signing interval of 7 1/2 days.” That is: A caching resolver that is not aware of those DNSSEC related timestamps will cache the RRSIG as long as its TTL, which is MUCH LONGER in my two DNS name examples than the 30 days of the valid signature!

Testing with Unbound

For test purposes I queried two different local Unbound DNS servers in my lab for the name ttl-52w.weberdns.de every 15 minutes. However, for some reason the decreasing TTL was always reset to its initial value after 1 d = 86400 s. For example, the following two lines show the answer with the lowest TTL just before the reset, followed by the answer with the initial value again:

```
ttl-52w.weberdns.de. 31363200 IN RRSIG A 8 3 31449600
ttl-52w.weberdns.de. 31449600 IN RRSIG A 8 3 31449600
```

That is, even though Unbound uses the really high and uncommon TTL value of 52 w, it does not decrement it all the way down to 0, but only by 86400 seconds. Later on I had a look at the default setting of “cache-max-ttl”, which indeed revealed this value:

```
weberjoh@jw-vm09-nmap:~$ sudo unbound-checkconf -o cache-max-ttl
86400
```
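The sawtooth pattern above fits a simple model: the answer shows the original TTL counting down, but the cache entry is dropped and fetched again every cache-max-ttl seconds. The Python sketch below models my measurements only, not Unbound's actual code, and `reported_ttl` is a hypothetical helper. In this model the reported TTL never drops below 31449600 - 86400 = 31363200, the lowest value in the listing.

```python
def reported_ttl(original_ttl: int, cache_max_ttl: int, elapsed: int) -> int:
    """TTL a client sees `elapsed` seconds after the first lookup.

    Model of the observation only: the original TTL counts down, but the
    cache entry is dropped and fetched again (restarting the countdown)
    every cache_max_ttl seconds.
    """
    lifetime = min(original_ttl, cache_max_ttl)
    return original_ttl - (elapsed % lifetime)


ORIGINAL = 31449600  # 52 w, TTL of ttl-52w.weberdns.de
CAP = 86400          # Unbound's default cache-max-ttl

# Poll every 15 minutes for two days, as in the lab test.
samples = [reported_ttl(ORIGINAL, CAP, t) for t in range(0, 2 * 86400, 900)]
print(min(samples), max(samples))  # 31364100 31449600
```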

I also wanted to test my scenario with a default BIND server used as a caching resolver in my network. However, the maximum TTL for which BIND caches any answer (max-cache-ttl) is 604800 s = 7 d. And since I did not want to change this default behaviour on my production server, nor install another BIND in my lab, I only tested it with Unbound.

Max TTL on Public Caching Servers

I also tried this scenario with public DNS resolvers. But most of them don’t use the real TTL values coming from the authoritative DNS servers; they apply their own maximum values instead. In the following listing you can see some TTLs from well-known caching servers of Deutsche Telekom (my ISP), Google Public DNS, Quad 9, and OpenDNS, measured with dnseval from the DNSDiag suite:

```
weberjoh@nb15-lx:~/dnsdiag$ ./dnseval.py -f ../dns-server2 ttl-52w.weberdns.de
server                 avg(ms)     ttl        flags
--------------------------------------------------------------------
194.25.0.68            2.589       85567      QR -- -- RD RA AD --
2003:40:2000::53       3.312       85567      QR -- -- RD RA AD --
8.8.8.8                9.174       20768      QR -- -- RD RA AD --
2001:4860:4860::8888   9.334       20767      QR -- -- RD RA AD --
9.9.9.9                3.077       31448769   QR -- -- RD RA AD --
2620:fe::fe            4.229       31448769   QR -- -- RD RA AD --
208.67.222.222         3.463       528882     QR -- -- RD RA -- --
```

As you can see:

- Telekom (first two lines) probably starts at 86400 s = 24 h,

- Google (line 3+4) at 21600 s = 6 h,

- Quad 9 (line 5+6) indeed at 31449600 s = 52 w, and

- OpenDNS (last line) probably at 604800 s = 7 d.
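These guesses follow one rule of thumb: take the smallest “round” TTL that is still at least as large as the observed remaining TTL. A short Python sketch of that heuristic is below. The list of candidate values is my own assumption, and the result is only a plausible starting value, not proof of how a resolver is configured.

```python
from typing import Optional

# Assumed candidate maximum TTLs: 1 h, 6 h, 24 h, 7 d, 52 w
CANDIDATE_CAPS = [3600, 21600, 86400, 604800, 31449600]


def guess_start_ttl(observed_ttl: int) -> Optional[int]:
    """Smallest candidate value the observed TTL could have counted down from."""
    for cap in sorted(CANDIDATE_CAPS):
        if observed_ttl <= cap:
            return cap
    return None  # larger than every candidate


# Remaining TTLs from the dnseval listing above (IPv4 servers only)
observations = {
    "Telekom 194.25.0.68": 85567,
    "Google 8.8.8.8": 20768,
    "Quad 9 9.9.9.9": 31448769,
    "OpenDNS 208.67.222.222": 528882,
}

for server, ttl in observations.items():
    start = guess_start_ttl(ttl)
    print(f"{server}: probably started at {start} s, cached about {start - ttl} s ago")
```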

That is, the only resolver that (probably) caches those long TTLs is Quad 9. However, since Quad 9 does DNSSEC validation, I couldn’t test my scenario with it. (Note that Quad 9 does DNSSEC validation on both of its servers, though this is documented differently in some resources; reference.) Furthermore, note that the TTLs from Quad 9 do not always decrease uniformly, as the following listing shows. One out of four Quad 9 servers returned a different TTL than the others:

```
weberjoh@nb15-lx:~/dnsdiag$ ./dnseval.py -f ../dns-server2 ttl-52w.weberdns.de
server         avg(ms)     ttl        flags
--------------------------------------------------------------------
9.9.9.9        4.320       31447682   QR -- -- RD RA AD --
2620:fe::fe    5.405       41367      QR -- -- RD RA AD --
9.9.9.10       4.344       31447682   QR -- -- RD RA AD --
2620:fe::10    3.460       31447682   QR -- -- RD RA AD --
```
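Spotting such an outlier by eye is easy with four servers, but with a longer server list a small script helps. The following Python sketch assumes the four-column layout shown in the listing above (server, avg(ms), ttl, flags); `ttl_outliers` is a hypothetical helper, and header or separator lines are skipped because their third field is not a number.

```python
from collections import Counter

# Rows from the dnseval listing above (assumed columns: server, avg(ms), ttl, flags)
DNSEVAL_ROWS = """\
9.9.9.9       4.320  31447682  QR -- -- RD RA AD --
2620:fe::fe   5.405  41367     QR -- -- RD RA AD --
9.9.9.10      4.344  31447682  QR -- -- RD RA AD --
2620:fe::10   3.460  31447682  QR -- -- RD RA AD --
"""


def ttl_outliers(rows: str) -> list[str]:
    """Return the servers whose TTL differs from the most common TTL."""
    ttls: dict[str, int] = {}
    for line in rows.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[2].isdigit():
            ttls[fields[0]] = int(fields[2])
    majority, _ = Counter(ttls.values()).most_common(1)[0]
    return [server for server, ttl in ttls.items() if ttl != majority]


print(ttl_outliers(DNSEVAL_ROWS))  # ['2620:fe::fe']
```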

Thinking About It

Let me revisit the scenario. Hm. At what point exactly should we run into a problem? It seems that DNSSEC-validating resolvers won’t have a problem with the overly long TTL at all, since they rely on the “signature expiration” timestamp. For non-validating resolvers, on the other hand, the RRSIG is of no interest anyway. And since the TTL for the mere A or AAAA record is set to an almost never-ending value, the actual IP addresses are not expected to change within that time. So, what could cause a problem?
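Why validating resolvers don’t care about the TTL: before using an RRSIG, a validator checks that the current time lies between the signature inception and expiration fields (RFC 4035, section 5.3.1). RFC 4034 defines these fields as 32-bit seconds since 1970, compared with serial number arithmetic (RFC 1982). A minimal Python sketch of that check follows; the helper names are hypothetical, and the inception time in the example is an assumption (expiration minus 30 days).

```python
from datetime import datetime, timedelta, timezone


def serial_lte(a: int, b: int) -> bool:
    """a <= b for 32-bit timestamps in serial number arithmetic (RFC 1982)."""
    return (b - a) % 2**32 < 2**31


def rrsig_time_valid(inception: int, expiration: int, now: int) -> bool:
    """Is `now` inside the RRSIG validity window? The TTL plays no role here."""
    now32 = now % 2**32  # RRSIG timestamps are 32-bit seconds since 1970
    return serial_lte(inception, now32) and serial_lte(now32, expiration)


def epoch(dt: datetime) -> int:
    return int(dt.timestamp())


expires = datetime(2018, 4, 4, 17, 50, 27, tzinfo=timezone.utc)  # from DNSViz
expiration = epoch(expires)
inception = epoch(expires - timedelta(days=30))  # assumed 30-day validity

before = epoch(datetime(2018, 3, 7, tzinfo=timezone.utc))
after = epoch(datetime(2018, 4, 5, tzinfo=timezone.utc))
print(rrsig_time_valid(inception, expiration, before))  # True
print(rrsig_time_valid(inception, expiration, after))   # False
```

However long the RRSIG has been sitting in some cache, a validator rejects it as soon as its expiration time has passed.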

Maybe it’s about this scenario: a DNSSEC-validating server is configured as a forwarder rather than resolving iteratively on its own, hence it forwards its DNS queries to a non-validating DNS server. In this case, the validating server could receive an RRSIG from the non-validating server’s cache that has already expired. However, at least with Unbound I could not reproduce this in my lab either. As soon as I configured it to forward all queries:

```
forward-zone:
    name: "."
    forward-addr: 208.67.222.222
```

while DNSSEC validation was still enabled, I was not able to use it at all anymore:

```
Mar 7 16:00:53 jw-vm09-nmap unbound: [2803:0] info: failed to prime trust anchor -- DNSKEY rrset is not secure . DNSKEY IN
Mar 7 16:00:53 jw-vm09-nmap unbound: [2803:0] info: failed to prime trust anchor -- DNSKEY rrset is not secure . DNSKEY IN
Mar 7 16:00:53 jw-vm09-nmap unbound: [2803:0] info: failed to prime trust anchor -- DNSKEY rrset is not secure . DNSKEY IN
Mar 7 16:00:53 jw-vm09-nmap unbound: [2803:0] info: failed to prime trust anchor -- DNSKEY rrset is not secure . DNSKEY IN
```

As the pfSense documentation puts it concerning Unbound: “Forwarding mode may be enabled if the upstream DNS servers are trusted and also provide DNSSEC support.” That is, I was not able to construct an actual problem this way either.
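For completeness, the failure I was hunting for can be written down as a toy model: a non-validating forwarder keeps serving a cached RRSIG for its full 52-week TTL, while the validating resolver behind it only looks at the expiration timestamp. This Python sketch is a simplification under stated assumptions: the class and function names are hypothetical, validation is reduced to the timestamp comparison (no cryptography, no serial number arithmetic), and the signature is assumed to expire 30 days after caching.

```python
from dataclasses import dataclass
from typing import Optional

DAY = 86400


@dataclass
class CachedRRSIG:
    stored_at: int   # when the forwarder cached the answer (seconds)
    ttl: int         # TTL from the authoritative answer (seconds)
    expiration: int  # absolute signature expiration (seconds)


def forwarder_lookup(entry: CachedRRSIG, now: int) -> Optional[CachedRRSIG]:
    """Non-validating forwarder: serves from cache until the TTL runs out."""
    if now - entry.stored_at < entry.ttl:
        return entry
    return None  # cache miss: it would query the authoritative server again


def validate(entry: CachedRRSIG, now: int) -> str:
    """Downstream validator, reduced to the timestamp check (no crypto)."""
    return "secure" if now <= entry.expiration else "bogus"


# 52-week TTL, signature valid for 30 days after caching (assumed)
entry = CachedRRSIG(stored_at=0, ttl=364 * DAY, expiration=30 * DAY)

for day in (10, 31, 363):
    served = forwarder_lookup(entry, day * DAY)
    result = validate(served, day * DAY) if served is not None else "re-fetched"
    print(day, result)
```

From day 31 on, the forwarder still hands out the stale RRSIG and the validator can only mark it bogus. In my lab Unbound never got that far, because it refused to work with a non-DNSSEC forwarder in the first place.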

Conclusion

To my mind, it’s good news that I was not able to produce a DNS cache error, at least as long as you’re using DNSSEC-aware resolvers. Anyway, DNS TTLs longer than 30 days are quite uncommon nowadays; well-known services tend to use TTLs shorter than 1 hour. Hence I don’t expect to run into such timing issues at all.

Furthermore, since the cache problem DNSViz refers to only applies to NON-validating DNS servers (which obviously don’t do DNSSEC), we are not facing a problem at all. If you really have some DNS names with such long-living TTLs, you’re not gonna change the mere A/AAAA/whatever record anyway. So I would expect that one day you will run into problems caused by the overly long TTL itself rather than by a validating resolver that forwards to a non-validating DNS server. ;)

However, if you ever run into such DNSSEC TTL issues, please drop me a comment below!

Featured image “Es ist vorbei! 168/365” by Dennis Skley is licensed under CC BY-ND 2.0.

Thanks so much! :)